YouTube Is Covering for Sniffy. Here’s The Proof.

YouTube is deleting “dislikes” to artificially bolster the approval ratings of nearly all of the videos on the official YouTube channel of the Biden White House. Below are the receipts that prove it.

Once a new Biden White House video is about 6-12 hours old, YouTube begins deleting dislikes from the video. They continue to delete additional dislikes at fairly regular intervals after that. The end result is that Biden regime videos appear to be much more popular than they really are, though they are still almost entirely upside down. In contrast, YouTube videos from the Trump White House usually had net positive approval ratios.

Dial F for Fraud.

Twitter users, including journalists, have been posting screenshots of this phenomenon for at least two months now.

A few months back, researcher Zoe Phin did the first numerical analysis of the phenomenon I witnessed. She charted likes/dislikes over time and noticed large discontinuities in the charts. In Phin’s original post, she produced the following chart of the likes, dislikes, and total views over time on a White House video featuring Jen Psaki:

In the above chart, notice that the red line, which represents dislikes, increases very organically, then drops suddenly by a large amount once the video is about nine hours old. After that large initial drop, there are periodic drops that seem to correspond to more batches of “dislikes” being deleted, and that seem aimed at keeping the dislikes below some threshold of a maximum number of dislikes.

After seeing Phin’s work, I decided to take her approach and expand on it. I signed up for permission to use the YouTube API (a service YouTube provides for querying statistical data about YouTube videos), and I built a system to monitor all YouTube videos published by the Biden White House and to produce comprehensive charts and statistics at 81m.org. I made all the data fully open so that anyone can use it in their own research.
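For readers who want to collect their own data, here is a minimal sketch of how one polling step could look. It is not the code behind 81m.org, which is not shown in this article. It assumes the public `videos.list` endpoint of the YouTube Data API with `part=statistics`, and it assumes the response carries `viewCount`, `likeCount`, and `dislikeCount` as strings. The function names, the sample response, and the placeholder key are hypothetical.

```python
import json
from urllib.parse import urlencode

# Assumed endpoint of the YouTube Data API v3 "videos.list" method.
API_ENDPOINT = "https://www.googleapis.com/youtube/v3/videos"


def build_stats_url(video_id: str, api_key: str) -> str:
    """Build the request URL for one video's public statistics."""
    query = urlencode({"part": "statistics", "id": video_id, "key": api_key})
    return f"{API_ENDPOINT}?{query}"


def parse_stats(response_text: str) -> dict:
    """Extract the counters from a videos.list JSON response.

    The API is assumed to serialize counters as strings, so they are
    converted to int. Fields missing from the response are skipped.
    """
    payload = json.loads(response_text)
    stats = payload["items"][0]["statistics"]
    wanted = ("viewCount", "likeCount", "dislikeCount")
    return {name: int(stats[name]) for name in wanted if name in stats}


# Hand-written sample response (hypothetical numbers, not real data).
sample_response = json.dumps(
    {"items": [{"statistics": {"viewCount": "5000",
                               "likeCount": "200",
                               "dislikeCount": "900"}}]}
)

url = build_stats_url("VIDEO_ID", "YOUR_API_KEY")
snapshot = parse_stats(sample_response)
```

Fetching `url` on a fixed schedule and storing each `snapshot` with a timestamp gives the time series that the charts are built from.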

The name “81m.org” is a reference to the supposed fact that Joe Biden received 81 million votes in the 2020 US general election, becoming, supposedly, the most popular president of all time. Being so popular, it’s strange that he seems to need YouTube’s public relations assistance.

The Real Dislikes.

I began calculating and charting a new statistic that I call the real dislikes. The real dislikes are a total of all increases to dislikes, ignoring the large decreases. I also calculate the real likes with the same method, but so far I have not found any discrepancy between the real likes and the official likes that YouTube reports.
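The calculation can be sketched in a few lines. This is an illustration of the idea, not the exact code running on 81m.org. It assumes the snapshots are taken often enough that no increase is hidden inside a drop, it ignores every decrease rather than only the large ones, and it treats the first snapshot as the starting total. The sample numbers are made up.

```python
from typing import Iterable


def real_count(samples: Iterable[int]) -> int:
    """Sum every increase between consecutive snapshots, ignoring decreases."""
    total = 0
    previous = None
    for value in samples:
        if previous is None:
            total = value  # first snapshot: take what is already there
        elif value > previous:
            total += value - previous  # organic growth since last snapshot
        # a decrease (value < previous) is ignored on purpose
        previous = value
    return total


# Hypothetical dislike snapshots with two sudden drops (610 -> 150, 410 -> 200).
snapshots = [0, 120, 340, 610, 150, 290, 410, 200, 260]

real_dislikes = real_count(snapshots)   # 930
official_dislikes = snapshots[-1]       # 260
```

When a series never drops, the real count and the last official count are identical, which is the behavior described below for channels that show no deletions.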

The charts reveal huge discrepancies between the real dislikes and the dislikes reported officially by YouTube. The following two charts suggest YouTube is performing heavy manipulation on behalf of the Biden White House, and that it gets worse with time:

The following table of a running tabulation from 81m.org shows that dislikes are periodically deleted (deletions circled in yellow):

In some cases, the level of statistical bolstering becomes absurd.

On the video titled “President Biden & Vice President Harris Meet with Xavier Becerra, Alejandro Mayorkas, & Advisors,” the official YouTube approval ratio is 44.95% at the time of writing. The real approval rating, if we ignore all the dislikes that YouTube has deleted, is 2.54%. YouTube appears to want its users to believe this video has over seventeen times the approval rating that it really enjoys.

To achieve this, YouTube has deleted nearly all the dislikes the video has received since it was published, with about 96.8% of all dislikes deleted so far. The true dislike count is 3,030% higher than the count YouTube displays. I call this 3,030% figure the “manipulation amount” on 81m.org, for lack of a better term.

Another video, titled “Vice President Harris Ceremonially Swears In Xavier Becerra as Secretary of Health & Human Services”, is even worse.

The official YouTube approval rating is 61.77% at the time of writing, while the real approval rating is only 2.38%. It seems that YouTube deleted nearly all of the 9,141 dislikes this video received, leaving only 138 dislikes on the video. That’s a manipulation amount of 6,523%, which is truly ridiculous.
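The arithmetic behind these figures can be checked with a short sketch. The formulas are one reading of the definitions given above: the approval ratio is likes divided by likes plus dislikes, and the manipulation amount is the percentage by which the real dislikes exceed the official dislikes. The like count of 223 is not stated in this article. It is back-solved from the two quoted ratios and should be treated as an assumption.

```python
def approval_ratio(likes: int, dislikes: int) -> float:
    """Share of reactions that are likes, in percent."""
    return likes / (likes + dislikes) * 100.0


def manipulation_amount(real_dislikes: int, official_dislikes: int) -> float:
    """How much higher the real dislikes are than the official ones, in percent."""
    return (real_dislikes - official_dislikes) / official_dislikes * 100.0


def deleted_share(real_dislikes: int, official_dislikes: int) -> float:
    """Share of all recorded dislikes that no longer appear, in percent."""
    return (real_dislikes - official_dislikes) / real_dislikes * 100.0


REAL_DISLIKES = 9141      # from the article
OFFICIAL_DISLIKES = 138   # from the article
ASSUMED_LIKES = 223       # assumption: inferred from the quoted ratios

official_approval = approval_ratio(ASSUMED_LIKES, OFFICIAL_DISLIKES)  # about 61.77
real_approval = approval_ratio(ASSUMED_LIKES, REAL_DISLIKES)          # about 2.38
manipulation = manipulation_amount(REAL_DISLIKES, OFFICIAL_DISLIKES)  # about 6523.9
deleted = deleted_share(REAL_DISLIKES, OFFICIAL_DISLIKES)             # about 98.49
```

Under these assumptions the numbers agree with the ones quoted above, with the manipulation amount truncated to 6,523%.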

Typically, YouTube seems to delete two thirds or more of the dislikes on the Biden regime’s videos.

How About on Other YouTube Channels?

Thus far, I haven’t found any evidence of YouTube doing similar deletions for other channels.

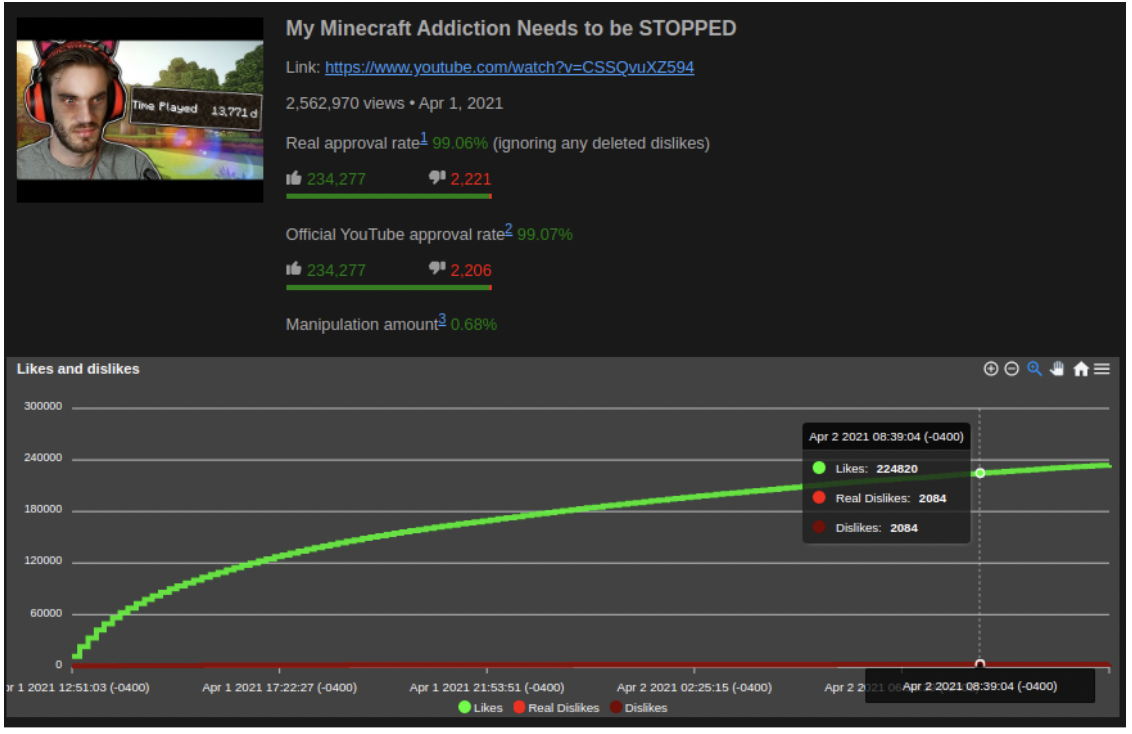

For comparison purposes, I started doing the same tracking on the videos of popular YouTube user PewDiePie.

PewDiePie has a reputation for being somewhat controversial, so I thought that there was a chance I would see some irregularity in his charts, some “fixing” by YouTube of “targeted dislike campaigns” directed toward him. However, I never noticed any irregularity at all in any of PewDiePie’s charts. On his videos, my real dislikes and YouTube’s official dislikes always line up perfectly, which seems to be a validation of my methodology.

I tracked a drama-free, mid-sized YouTuber as well, and found no manipulation on any of his videos, other than microscopic differences, usually less than 1%.

Today, I began tracking Senator Ted Cruz’ official YouTube channel, so we’ll see if we find any oddities in his statistics. Based on what I’ve seen so far today, I don’t think we will find any, but time will tell.

One Possible Explanation (But Not Really).

I saw a purported explanation of the dislike-deletion phenomenon on Y Combinator’s Hacker News, a website that considers itself a highbrow, startup-focused alternative to Reddit, where Silicon Valley’s aspiring entrepreneurs discuss their interests.

A Hacker News commenter explained that all of the Trump White House’s YouTube subscribers were transferred over to the Biden White House by YouTube at the request of the Biden regime. I was able to locate a Twitter comment that backs up that assertion, made by Rob Flaherty, Director of Digital Strategy for the White House under the Biden regime. Here is the exchange between two Hacker News users discussing the phenomenon:

YouTube transferred all the Trump’s WH subs to Biden’s WH – while Trump’s WH had started with zero. Very stupid idea and apparently requested by the Biden admin. Now you have millions of Trump subs just disliking every video.

If that’s true, it might explain the phenomenon of the massive dislike ratios, but how does it excuse the behavior of deleting users’ dislikes?

Force-joining Trump subscribers to the Biden regime’s YouTube channel, and then deleting those users’ negative reactions to the content shoved before them, only serves to express the sentiment, You will be forced to look at the content we put before you, but you will not be allowed to express your dislike of it.

In fact, it’s far more insidious than that: You will be allowed to express your opinion, but we’ll send your contribution down the memory hole a short while later, and you’ll be none the wiser.

These YouTube users will feel satisfied for a moment when they see the dislike button light up after they’ve clicked it and they see the dislike count increase by one, but their contribution will be deleted silently a few hours later, long after they have navigated away. However, as I will explain later, they likely will still see that the dislike button is highlighted (that is, pressed) when they return.

Are the Trump supporters who were forced to follow Biden’s White House guilty of executing a “targeted dislike campaign”?

YouTube forces all of the Trump White House’s YouTube subscribers to subscribe to the Biden White House at the Biden regime’s request, and it backfires on them when the Trump followers react negatively to each video, and then YouTube declares that those users are part of a “targeted dislike campaign?” If that turned out to be the case, that’s pretty rich.

If this version of events is true, perhaps YouTube thought they were doing the Biden regime a favor by giving them all of Trump’s followers. When that turned out to go badly, perhaps they were stuck between a rock and a hard place. They had to choose whether to remove all of those followers (which would be bad optics) or remove their negative effect (better optics, until they’re found out).

Flashbacks to November 4th.

In the 2020 general election, social media users reported seeing evidence of election fraud in live vote totals, when vote counts suddenly shot way up (for Biden) or way down (for Trump) in zero time. I do not know if any of that purported evidence was valid, or if it was only a mirage, but a comment I keep seeing is that my charts are reminiscent of the election-night charts: Initially, there is the expected and orderly organic growth of chart lines, followed by sudden hard-to-explain changes.

Here are two such comments:

“When you invert this it become[s] election results..”

“Vertical lines in graphs give me nightmares..”

After genuine public sentiment is expressed through voting, the public rightfully becomes suspicious when there is seemingly a manipulation of results and then a refusal to consider the possibility that manipulation could have happened.

In fact, in the case of the 2020 US general election, there was a maniacal obsession with hiding any artifacts that could call the legitimacy of the tabulation into question.

Policy Impact?

Does the Biden White House know about the manipulation that YouTube may be engaged in, on their behalf?

I’d like to ask that question of Rob Flaherty, Director of Digital Strategy for the White House. If the White House is aware, then they’re not being very transparent for a White House that promised total transparency. As The National Pulse previously reported, “In press secretary Jen Psaki’s first press briefing, she told reporters that the Biden presidency heralded a commitment ‘to bring transparency and truth back to the government; to share the truth, even when it’s hard to hear.’”

Maybe 95% of dislikes are just a little bit too much truth and just a little bit too hard to hear, so those need to be swept under the rug.

If the White House isn’t aware of the manipulation that YouTube is doing in their favor, then is YouTube putting a hidden finger on the scale, putting the White House in a bubble, insulating the White House from the true amount of negative sentiment they are receiving from a segment of the public?

Either way, this serves as yet another example of a Big Tech company manipulating what the public sees, attempting to manufacture consent on behalf of the Biden regime.

YouTube Won’t Respond, But They Will React.

I would love to hear a direct response from YouTube on this. However, the only “response” I actually expect is that next we’ll see YouTube prevent the likes and dislikes from being observed or measured at all. (Every countable artifact that disagrees with the “truth” must be hidden.)

Soon, YouTube might disable the ability to view dislikes entirely.

Alternatively, or in addition, YouTube might simply deny access to the YouTube API, which would prevent us from tracking YouTube’s data, or YouTube might block all API keys from accessing data on Biden regime videos, citing some sort of violation of the YouTube terms of service. It is certainly within YouTube’s legal rights to do any of those things, though it is ethically wrong since they purport, when convenient, to be an open platform.

These days, the only response the dissenting public ever seems to get is no response at all, or a promise to circle back that is never delivered upon.

If YouTube were to issue a response saying, “We didn’t delete dislikes,” then that would be a misleading partial truth, as I will explain in more detail later, since they are likely deleting dislikes from totals while technically keeping an internal record of the dislikes.

Who is Executing the Dislike Deletion?

Someone or some group of people at YouTube is deleting dislikes for the Biden regime, and they’re doing it in a very obvious way.

How many people would it take to carry out this task of deleting dislikes? In my professional opinion, it wouldn’t take more than one person, though that certainly doesn’t mean that multiple people aren’t involved. In my career, I have consulted on software security and software architecture for some of the largest tech companies in Silicon Valley, and I have also designed and built complicated and critical back-end infrastructure for several small enterprises. I know how these large software systems work, and I also have some idea of how executive, management, and engineering teams function together.

From an engineering perspective, the dislike deletion effect we’re seeing would be a very easy job to pull off. Doing so wouldn’t require any modification of YouTube’s existing back-end (or front-end) code. A single coder with the right access could accomplish it, simply by writing a short program in a general-purpose scripting language, the sort of thing coders have to make all the time in the course of a normal workday or workweek.

Internally, likes and dislikes are not simply stored as a total count; instead, the likes and dislikes are recorded in a running ledger as events. As a simplified human-readable example, a “dislike” event might be stored as, At 1:23pm on March 26, 2021, user #123,456,789 disliked video with ID MN8BLP-DEt0.

The running totals of likes and dislikes are updated live as these events are created, and those running totals are displayed on the video’s web page. For the example I just gave, the total number of dislikes would simply be incremented by one.

In order for the coder at YouTube to “correct” for the unwanted dislikes present on a video, the coder’s script could simply do one of two things: It could delete a large number of “dislike” events and re-run the code that calculates and stores the totals; or, it could manually set the calculated total to a lower number while doing nothing to the stored “dislike” events. The latter approach is probably the simpler and better one, since it achieves the desired result while individual users continue to see that they have disliked the video (avoiding their suspicion), so I suspect the latter is what is happening.
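To make the difference between the two approaches concrete, here is a toy model. It is a sketch of the general design described above, an event ledger plus a stored running total, and it is in no way YouTube's actual code or schema. Every class, method, and field name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VideoReactions:
    """Toy model: a ledger of dislike events plus a stored running total."""
    dislike_events: list = field(default_factory=list)  # (user_id, timestamp)
    cached_dislikes: int = 0                            # total shown on the page

    def record_dislike(self, user_id: int, timestamp: str) -> None:
        # Normal path: append the event and bump the running total by one.
        self.dislike_events.append((user_id, timestamp))
        self.cached_dislikes += 1

    def user_has_disliked(self, user_id: int) -> bool:
        # What decides whether the user's dislike button appears pressed.
        return any(uid == user_id for uid, _ in self.dislike_events)

    def purge_events(self, keep: int) -> None:
        # Approach 1: delete events, then recompute the total from the ledger.
        self.dislike_events = self.dislike_events[:keep]
        self.cached_dislikes = len(self.dislike_events)

    def override_total(self, new_total: int) -> None:
        # Approach 2: overwrite the stored total and leave the ledger alone.
        self.cached_dislikes = new_total


def make_video(n_dislikes: int) -> VideoReactions:
    video = VideoReactions()
    for user_id in range(n_dislikes):
        video.record_dislike(user_id, "2021-03-26T13:23")
    return video


purged = make_video(1000)
purged.purge_events(keep=100)

overridden = make_video(1000)
overridden.override_total(100)
```

Both videos now display 100 dislikes. In the purged one, user 999 would find the dislike button reset. In the overridden one, the ledger still holds all 1,000 events, so user 999 still sees the button pressed.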

Either way, the goal is accomplished: unwanted dislikes effectively deleted, unapproved revolt effectively quashed. The result of either method would look exactly like what we are seeing in the charts of the Biden regime’s videos. Any moderately experienced programmer who is reading this will know that what I am saying is accurate.

The official dislikes counted by the YouTube API also reflect the manipulation. However, 81m.org has already taken a snapshot of all the values as they rose and fell, which is why I am able to calculate and chart the real dislikes statistic.

Why Not Fake Likes, Instead?

Some might wonder, “If YouTube is willing to delete dislikes, then why wouldn’t they also manufacture likes? Why aren’t we also seeing likes suddenly shoot up in the charts?” Manufacturing likes is a higher level of deceit. If someone at YouTube believes that the unwanted dislikes (though in fact genuine and organic) are the result of a “targeted dislike campaign,” then that person will feel justified in deleting them.

However, what would be the justification for creating fake likes? The folks at YouTube would have to believe that anyone who failed to like the video was part of a “targeted forgot-to-like campaign.” Honestly, I wouldn’t be surprised to see this happen one day at the rate we’re going, but you see my point.

Technically, in the former method described above, the coder would have to generate a series of “like” events, and each event would have to be ascribed to some real (or manufactured) YouTube user. Which user IDs would the coder select to be the assignees of these new “like” events? That is not a simple question. In the latter, more likely method where the totals are updated directly, likes could be created simply by artificially increasing the total.

Based on what I know of the culture inside Big Tech, I doubt a single lone coder would extend his neck so far without instruction from above.